Citations, Altmetrics and Researcher Profiles

Measure the performance of your research outputs

There are a number of ways of measuring and describing the performance of individual research outputs. These can be broadly categorised as citation metrics, altmetrics and indicators of esteem. Determining where to focus your attention will depend on your discipline, the type of research output and an understanding of the likely performance strengths of an individual output.

Find suggested performance measures for a:

Journal article

- Citations

- CNCI & FWCI

- Percentile

- Highly cited articles

- Altmetrics

- Media & parliamentary attention

- Policy mentions

- Patent mentions

The number of citations received by an article is an indicator of the level of engagement it has achieved. To find who is citing a journal article, search citation databases such as Web of Science, Scopus, Dimensions, or Google Scholar.

- Do a title search for your article

- Look for Times Cited or Cited by

- Link to the list of citing sources

This is how the citation counts will appear in each database:

- Web of Science

- Scopus

- Google Scholar

- Dimensions

Track citations

Citation databases allow you to set an alert so you can be notified as soon as a new citation is added to the database. Alerts enable researchers to track where, by whom, and how often an article has been cited. Create an author alert in citation databases such as Scopus or Web of Science.

- Scopus tutorial: Search for an author and set up an alert.

- Web of Science tutorial: Stay up-to-date

- Google Scholar: Alerts

- Beyond these, some subject-specific databases also provide citation linking.

The FWCI (Field-Weighted Citation Impact) and CNCI (Category Normalised Citation Impact) both calculate the ratio of citations received relative to the expected world average for the subject category, publication type and publication year. These metrics are very useful in applications as they put citation counts in context of the average citations for similar papers, more objectively showing that a paper is highly cited.

Many researchers find that the FWCI and CNCI metrics for the same article are different. This is due to differences in which journals and citing papers are indexed in each system.
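As a rough illustration of the ratio described above, the sketch below divides a paper's citation count by an expected (average) count for comparable papers. The function name and all figures are hypothetical. The real FWCI and CNCI baselines are calculated by each provider from its own indexed content, which is one reason the two values for the same article can differ.

```python
def normalised_citation_impact(citations: int, expected_citations: float) -> float:
    """Ratio of actual citations to the expected average for similar papers.

    A value of 1.0 means the paper is cited exactly as often as expected;
    a value of 2.0 means it is cited twice as often as expected.
    """
    if expected_citations <= 0:
        raise ValueError("expected_citations must be positive")
    return citations / expected_citations


# Hypothetical paper with 30 citations. Assume similar papers (same subject
# category, publication type and year) average 12 citations in one database
# and 15 in another.
impact_a = normalised_citation_impact(30, 12.0)
impact_b = normalised_citation_impact(30, 15.0)
print(impact_a, impact_b)  # 2.5 2.0
```

In this made-up case the same paper scores 2.5 against one baseline and 2.0 against the other, even though the citation count is unchanged.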

Field-Weighted Citation Impact (FWCI)

The FWCI can be found in SciVal, where it can be seen for each publication and as an average for all of a researcher's publications. FWCI can be added to most SciVal reports. In Explore, after setting up your researcher profile, to locate a list of FWCIs for each paper, select the Summary page, click on View List of All Publications, then select FWCI as the metric.

The FWCI can also be found in Scopus, where it can only be seen on individual publication records.

UWA authors can also view FWCI for their individual publications, when signed in to the UWA Research Repository:

- Click on the title of the relevant publication to open it

- Choose the Metrics tab.

Category Normalised Citation Impact (CNCI)

The CNCI is calculated on Web of Science data, and is accessible using InCites (benchmarking & analytics). InCites can be used to find the CNCI for each individual publication, or an average for all publications authored by an individual researcher indexed in Web of Science.

In InCites, select to Analyze Researchers. Use the Filters to locate the individual researcher by ORCID, Researcher ID, or Name. If CNCI is not already visible, click Add Indicator to select it. The average CNCI across all papers will be displayed.

View and download a list of all the selected Researcher's papers, including the CNCI for each paper, by clicking the number under the 'Web of Science Documents' column.

Journal Normalized Citation Impact (JNCI)

The JNCI is similar to the CNCI but normalizes the citation rate for the journal in which the document is published. The JNCI of a single publication is the ratio of the actual number of citing items to the average citation rate of publications in the same journal, in the same year and with the same document type. The JNCI for a set of publications is the average of the JNCI values of the individual publications.
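The two JNCI definitions above can be sketched as follows. This is a minimal illustration with invented numbers, not the InCites implementation; the function name and the journal averages are assumptions.

```python
from statistics import mean


def jnci(citations: int, journal_average: float) -> float:
    """Citations divided by the average citation rate of publications in the
    same journal, in the same year and with the same document type."""
    if journal_average <= 0:
        raise ValueError("journal_average must be positive")
    return citations / journal_average


# Hypothetical (citations, journal average) pairs for three papers.
papers = [(8, 4.0), (3, 6.0), (10, 5.0)]

per_paper = [jnci(cites, avg) for cites, avg in papers]
set_jnci = mean(per_paper)  # JNCI for the set is the mean of per-paper values

print(per_paper)  # [2.0, 0.5, 2.0]
print(set_jnci)   # 1.5
```

Note that the set-level figure is the average of the individual ratios, not the ratio of total citations to the total of the journal averages.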

Percentile (Web of Science/InCites)

A percentile indicates how a paper has been cited relative to other Web of Science-indexed publications in its field, year, and document type, and is therefore a normalized indicator. Percentiles may provide evidence of impact such as "this paper is in the top 10% most highly cited papers in the field".
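To make the idea concrete, the sketch below computes a percentile as the percentage of papers in a reference set (same field, year and document type) that are cited more often than the paper of interest, so a lower value means a more highly cited paper. This convention, the function name and the citation counts are assumptions for illustration; individual tools may calculate or display percentiles differently (for example, inverted so that higher is better).

```python
def citation_percentile(citations: int, reference_counts: list[int]) -> float:
    """Percentage of reference-set papers cited more often than this paper."""
    if not reference_counts:
        raise ValueError("reference_counts must not be empty")
    more_cited = sum(1 for count in reference_counts if count > citations)
    return 100.0 * more_cited / len(reference_counts)


# Hypothetical citation counts for ten papers in the same field, year and
# document type, including the paper of interest (21 citations).
reference = [0, 1, 1, 2, 3, 5, 8, 13, 21, 40]

print(citation_percentile(21, reference))  # 10.0
```

Under this convention, a value of 10.0 would support a statement such as "this paper is in the top 10% most highly cited papers in the field".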

Find your article percentile

You can find the percentile for each of your Web of Science-indexed publications in InCites (Benchmarking and Analytics).

- Sign in to InCites.

- Select to Analyze Researchers.

- Narrow the displayed results to an individual using the Person filter on the left. Search by ORCID, Researcher ID, or Name.

- View and download a list of all the selected Researcher's papers, including the Percentile for each paper, by clicking the number under the 'Web of Science Documents' column.

- Alternatively, click Add Indicator above the table to view Documents in Top 1% or Top 10%
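Once you have downloaded the per-paper percentiles, the share of your documents in the top 1% or top 10% can be tallied as in the sketch below. It assumes percentiles where lower is better (a value of 10 or less means top 10%); the function name and the sample values are hypothetical.

```python
def share_in_top(percentiles: list[float], threshold: float) -> float:
    """Percentage of papers whose percentile is at or below the threshold."""
    if not percentiles:
        raise ValueError("percentiles must not be empty")
    in_top = sum(1 for p in percentiles if p <= threshold)
    return 100.0 * in_top / len(percentiles)


# Hypothetical percentiles for five papers (lower means more highly cited).
percentiles = [0.8, 4.2, 9.9, 35.0, 62.5]

print(share_in_top(percentiles, 1))   # 20.0
print(share_in_top(percentiles, 10))  # 60.0
```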

It is also possible to find the percentile for each of your Web of Science-indexed publications in Web of Science.

- Use the Researchers tab to perform a Name Search for yourself.

- Select your name from the results list.

- The Author Impact Beamplot Summary shows your overall citation percentile median in Web of Science.

- Click on the 'Open metrics dashboard to view the beamplot' link in your Web of Science record to see the percentiles for your individual articles.

To find highly cited articles on a particular topic, run a keyword search in citation databases such as Scopus or Web of Science. Sort the search results by "Cited by (highest)" (Scopus) or "Sort by: Times cited: Highest to lowest" (Web of Science), so the most highly cited work is at the top of your search results.

This Scopus tutorial demonstrates the use of Scopus article metrics.

Altmetrics measure how many times a research output has been shared, mentioned or downloaded from online sources such as social media sites, blogs, mainstream media and reference managers. Find out more about Altmetrics.

The Altmetrics tools covered in this guide collect social and traditional media mentions, but there are some other sources you might like to check as well.

- The Conversation - If you have written for The Conversation you can log in to your profile to see a dashboard detailing metrics and engagement with your article(s) that may be used to demonstrate impact of your research.

- Google News - Try searching for your name or research output in Google News to see if it is appearing in any media.

- Capital Monitor - Search for your publication or your name in Capital Monitor to locate mentions in government documents and proceedings, for example references to your report in the Hansard. These would be excellent evidence of the impact of your work.

Policy citations are the number of times your research outputs have been mentioned in policy documents from government bodies, professional organizations or bodies, or non-government organizations (NGO). They are a good way of demonstrating how your research has influenced policy or practice in a particular field and support the development of your impact narrative beyond citations.

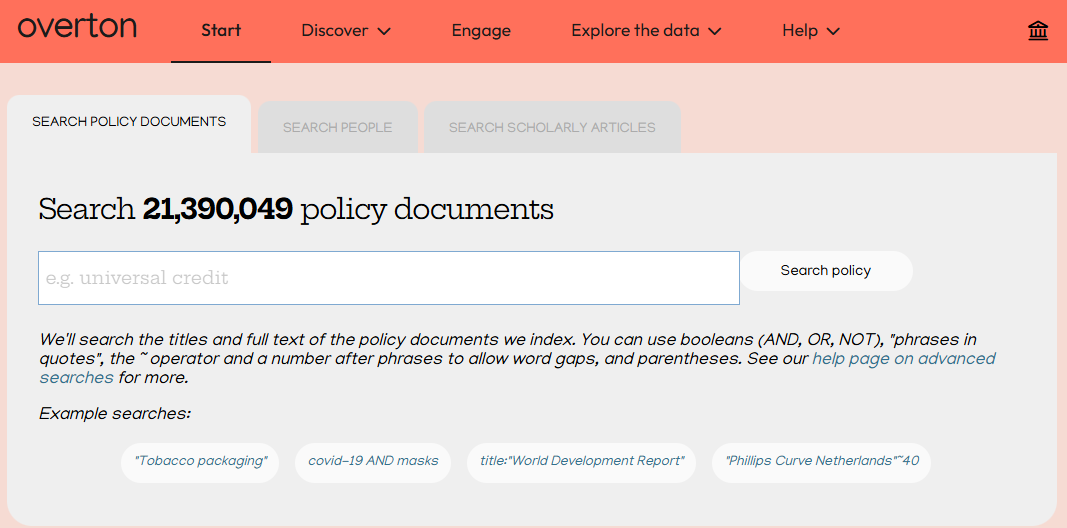

Overton is the world’s largest searchable index of policy documents, guidelines, think tank publications and working papers. Overton collects data globally from over 190 countries and in many different languages.

To search Overton directly:

- Access UWA’s subscription to Overton via OneSearch.

- Locate policy documents on your topic of interest using the default ‘Search Policy Documents’ tab.

- Search for all policy documents citing any of your research using the ‘Search People’ tab.

- Search for citations to specific research outputs in the ‘Search Scholarly Articles’ tab.

For more detailed instructions on how to search Overton, please see the Altmetric Sources > Overton section of this guide.

There are a few other tools you can use to track policy citations, all of which use data from Overton:

- SciVal identifies and counts citations that research papers have received from policy documents. To access a policy report on yourself, follow these steps:

- Access SciVal through OneSearch. SciVal requires users to create a personal account - please use your UWA email address to ensure full access to subscribed content.

- Select Explore

- Click on Search Entities (top menu on the left hand side of screen) and search for your name. Note that if your name does not appear, you will need to Define a new Researcher

- SciVal will default to a Summary page. Scroll down the left-hand side menu and select 'Policy Impact'

- Select your date range.

- PlumX reports on policy citations. You will be able to see policy citations wherever you find PlumX Metrics, including Scopus, ScienceDirect, Digital Commons, SSRN, Engineering Village and Elsevier journal websites. The PlumPrint is also embedded at the research output level in the UWA Profiles and Research Repository. Search for a particular publication or browse your 'Research Output' list in the Repository and, if available, hover over the PlumPrint to see whether any policy citations have been picked up. Click the 'see details' link for a full report and information on the source of the citation.

- Sage Policy Profile - A free tool for individual researchers and academics. Set up a profile to track and visualise how your research has been cited in policy from governments, think tanks, and policymakers across the world. Note that this tool uses your ORCID iD to identify your publications, so make sure your ORCID profile is up to date. If you haven't already, connect your ORCID iD to your UWA profile for automatic updates to your ORCID profile when new outputs are added to the Repository.

Patents often cite research papers. If your research has been cited in a patent, this shows the connection between your work and industry or commercial activity.

Several tools collect Patent citations and information:

- SciVal identifies and counts citations that research papers have received from patents, and links to both the citing patents and cited articles

- Access SciVal through OneSearch. SciVal requires users to create a personal account - please use your UWA email address to ensure full access to subscribed content.

- Select Explore

- Click on Search Entities (top menu on the left hand side of screen) and search for your name. Note that if your name does not appear, you will need to Import a new Researcher

- SciVal will default to a Summary page. Scroll down the left-hand side menu and select 'Patent Impact'

- Select your date range.

- Lens.org is a database for articles and patents. Search by patents, scholarly works, profiles and more.

- Both PlumX and Altmetric track patent citations. Access via the PlumPrint and Altmetric doughnut embedded at the research output level in the UWA Profiles and Research Repository. Search for a particular publication or browse your 'Research Output' list in the Repository and, if available, hover over the icons to see whether any patent citations have been picked up. Click the 'see details' link for a full report and information on the source of the citation.

- Google Patents includes more than 120 million patent publications. A list of citing patents is included in the full text of the patent.

Book/book chapter

There is no one measure that captures the impact of books/book chapters across the board, and which measures are effective may depend on the type, discipline, content, format, publisher etc. You may find you need to use a combination of traditional metrics, altmetrics and other measures of esteem to fully reflect the value of your book in research, teaching and other professional activities. Various recommended strategies are detailed in the subsequent tabs.

Books and book chapters are increasingly indexed in databases, so it is worth checking to see if you can find citation metrics. Resources to check:

- Book Citation Index (see the Clarivate Master Book List for publishers that are indexed)

- Scopus (see Scopus Book title list for publishers that are indexed)

- Google Scholar

- Dimensions

If your book/chapter only has a few citations, consider looking more closely at who has cited your work. Are they a high profile researcher in your area? Have they reflected favourably on your findings?

Book reviews can be a good source of examples of impact in a field, especially if they’re from notable people in the field. To find more formal, ‘reputable’ reviews (meaning published reviews, rather than those written by members of the public), search in the following locations:

1. OneSearch indexes a vast array of content, including reviews. Search by book title and use the ‘Review’ filter to limit your results.

2. Established cultural magazines and publications are also good sources of non-scholarly but still reputable book reviews:

- Australian Book Review

- New York Times Book Reviews

- The New Yorker Book Reviews

- The Guardian: Books

- JSTOR

- Pressreader

- Project MUSE

- ProQuest

- ProQuest Ebook Central

- Scopus

- The Times Literary Supplement

- Web of Science Core Collection

3. Quantity can also be a useful measure (e.g. "my book received over 200 reviews on Amazon"). Check book review sites such as Goodreads, Amazon and Google Books.

There are many other ways to measure the quality and impact of your books and book chapters.

Libraries holding your book or the book you contributed to in their collections is another sign of impact. Useful metrics may be:

- The total number of libraries that hold your book

- Whether prestigious libraries hold the book, e.g. Bodleian Libraries, The Library of Congress, The National Library of Australia. Look out for significant libraries for your particular area or topic.

For holdings in international libraries, search WorldCat. Look out for different editions, which may have separate records!

For holdings in Australian Libraries, search the National Library tool, Trove, using their Books and Libraries filter. Look out for different editions, which may have separate records!

Here are other ways books can be received and used that can indicate impact and quality:

- Altmetrics (see the Alternative metrics tab). Note that altmetrics tools use ISBNs to track and collect information on books. When an ISBN cannot be found, a tool may fall back on other identifiers, which can belong to different editions, so the resulting metrics may not measure the specific edition of your book.

- If the book was published by a prestigious or reputable publisher: for example, is it an academic/university publisher? Is it on the WASS-SENSE book publisher ranking list?

- If the book resulted in publicity and events: were you interviewed during the book release, or invited to a signing or to give a talk?

- If the book has received prizes/awards

- Ongoing demand - If the book was republished, published in electronic format, or translated into other languages

- If the book has been used as a textbook by a school or university

- Is it an Open Educational resource?

Report

Reports are rarely indexed in the large citation databases, Scopus and Web of Science. The UWA Profiles and Research Repository is indexed by Google Scholar, which not only allows UWA authors to track citations within Google Scholar but also increases the discoverability of UWA publications and the opportunity for citation. We recommend that UWA authors:

Add their reports to the UWA Repository, including a PDF copy if their publisher agreement allows, or the link to its online location.

Google Scholar will only index publication records which include an abstract, and may preferentially index papers with full text available (Google Scholar Help, 2022).

If the full text of your report can be made available through the UWA Repository, the number of times the report has been downloaded will be displayed in the Repository which can be a useful measure of engagement.

Request a Digital Object Identifier (DOI) from the Library as soon as possible so that "altmetrics" such as mentions of the report on social media can be tracked accurately using the DOI. These will appear next to your report in your Repository profile as colourful visualisations from PlumX and Altmetric.com. Request a DOI through Service Desk.

Search for your report in Capital Monitor to locate mentions in government documents and proceedings, for example references to your report in the Hansard. These would be excellent evidence of the impact of your work.

Non-traditional Research Outputs (NTROs)

Non-traditional research outputs (NTROs) are scholarly works or creative endeavours that go beyond traditional academic publications like journal articles and books. NTROs are typically produced by researchers in disciplines where non-textual forms of expression and dissemination are valued, such as the arts, design, music, film, and digital media.

Measuring NTROs can be challenging because of their diverse nature and formats, and because they are typically not indexed by academic databases and therefore do not receive citation counts. Altmetrics tools have made tracking easier for some NTROs, but rely on the work having a persistent identifier (PID) that is used in all communications around that output. Read more about PIDs for publications and how to apply for them in our How to Publish and Disseminate Research guide.

Other possible measures of quality and esteem that can help when building a narrative around engagement and impact are listed below by output type.

- Architectural and environmental design

- Creative writing

- Curated or exhibited event

- Live performance

- Music composition

- Recorded work

- Visual arts

For architectural and environmental design, measures of quality, esteem and engagement include:

- Awards and prizes

- Reviews

- Commissions

- Collaborations

- Exhibitions

- Impacts such as sustainability, occupant health, liveable cities

For creative writing, measures of quality, esteem and engagement include:

- Reviews

- Editions and translations

- Prestige of the publisher or publication

- Awards, prizes and nominations

- Best seller listings

- Sales or download figures from the publisher

- World-wide and Australian library holdings

- Esteem of the publisher and/or editor

- Media mentions, including traditional and social media

For curated or exhibited events, measures of esteem, quality and engagement include:

- Invitations to curate

- Invitations to exhibit

- Commissions

- Prestige of the venue

- Reviews

- Visitor numbers

- Website visits

- Sales data

- Catalogue sales and downloads

- Media and news mentions, including traditional and social media

For live performance, measures of esteem, quality and engagement include:

- Invitations to perform

- Commissions

- Audience numbers

- Prestige of the venue

- Reviews

- Media and news mentions, including traditional and social media

For music composition, measures of quality, esteem and engagement include:

- Prestige of publisher or publication

- Performance of the work and prestige of the performer

- Reviews

- Awards, prizes and nominations

- Sales or download figures

- Commissions and grants

- Library holdings

- Information about accompanying work (e.g. performance; production of film soundtrack)

- Media mentions, including traditional and social media

For recorded work, measures of quality, esteem and engagement include:

- Reviews

- Sales, downloads, streaming, playlist data

- Prestige of the record label

- Prestige of the record producer

- Media mentions, including traditional and social media

- Inclusion in compilations

For visual arts, measures of quality, esteem and engagement include:

- Commissions and grants

- Invitations to exhibit

- Inclusion in exhibition catalogues

- Collaborations

- Awards and prizes

- Sales data

- Media mentions, including traditional and social media

- Artist-in-residence programs (public and community engagement)

Data

Just as for other research outputs, use of your data by other researchers can be described when discussing your research performance.

Data citation is an emerging practice. "Data citation refers to the practice of providing a reference to data in the same way as researchers routinely provide a bibliographic reference to outputs such as journal articles, reports and conference papers. Citing data is increasingly being recognised as one of the key practices leading to recognition of data as a primary research output."

Australian National Data Service (ANDS) data citation support material

Resources

Halevi, G., Nicolas, B. & Bar-Ilan, J. (2016). The Complexity of Measuring the Impact of Books. Publishing Research Quarterly 32, 187–200. https://doi.org/10.1007/s12109-016-9464-5

Mills, K., & Croker, K. (2020). Measuring the research quality of Humanities and Social Sciences publications: An analysis of the effectiveness of traditional measures and altmetrics. https://doi.org/10.26182/v063-r898

- Last Updated: Sep 16, 2025 5:02 PM

- URL: https://guides.library.uwa.edu.au/researchmetrics

CONTENT LICENCE

Except for logos, Canva designs, AI generated images or where otherwise indicated, content in this guide is licensed under a Creative Commons Attribution-ShareAlike 4.0 International Licence.

The University of Western Australia

PRV12169, Australian University